Short Bio

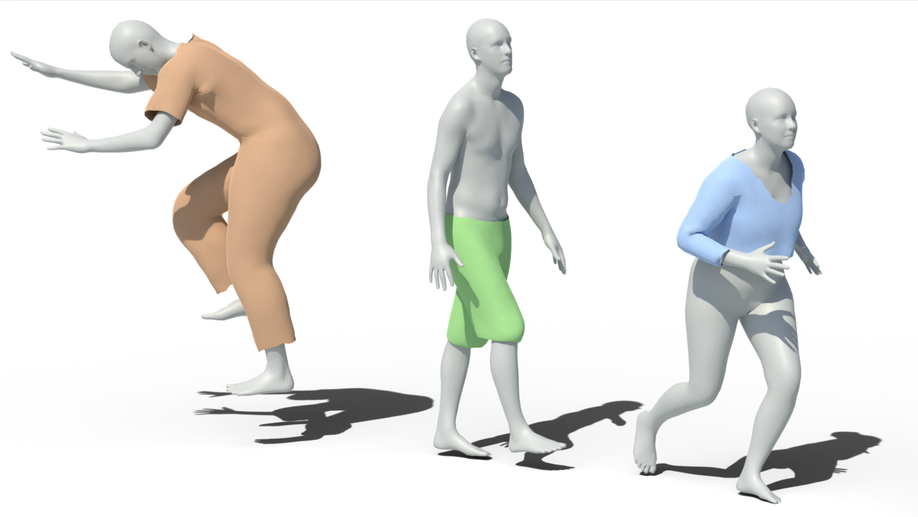

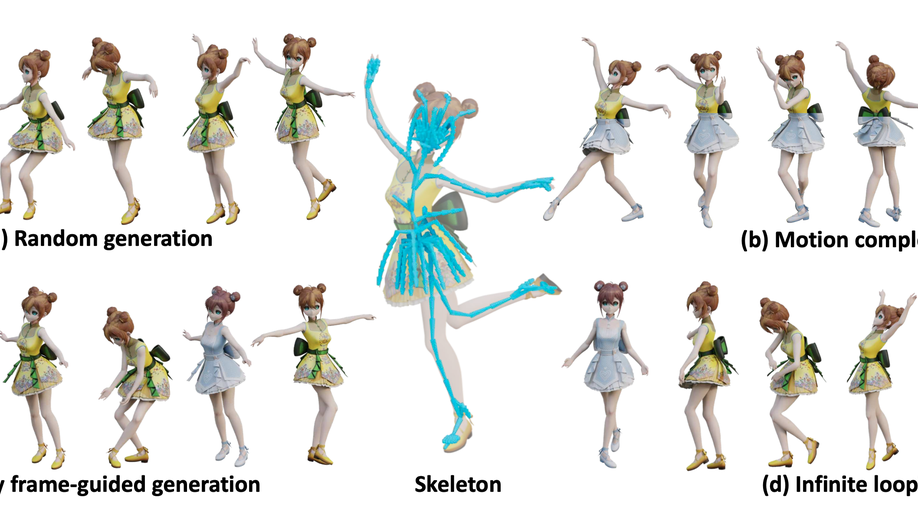

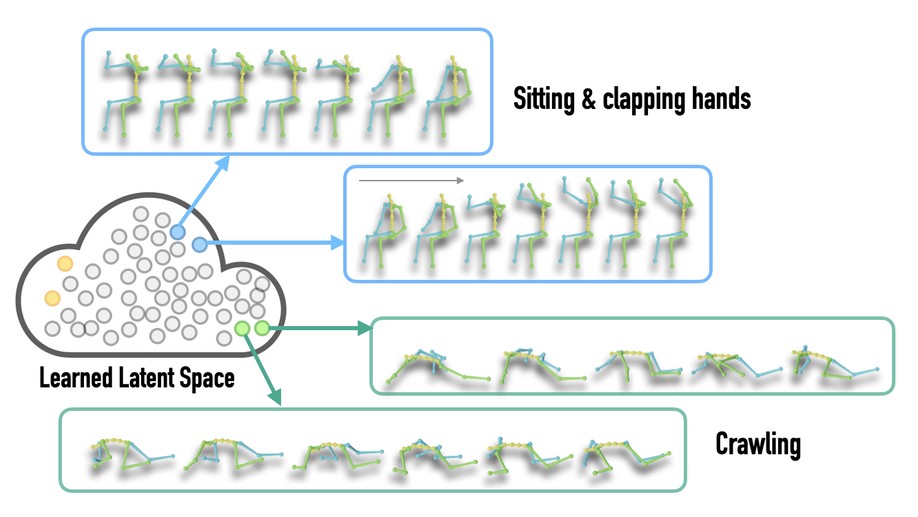

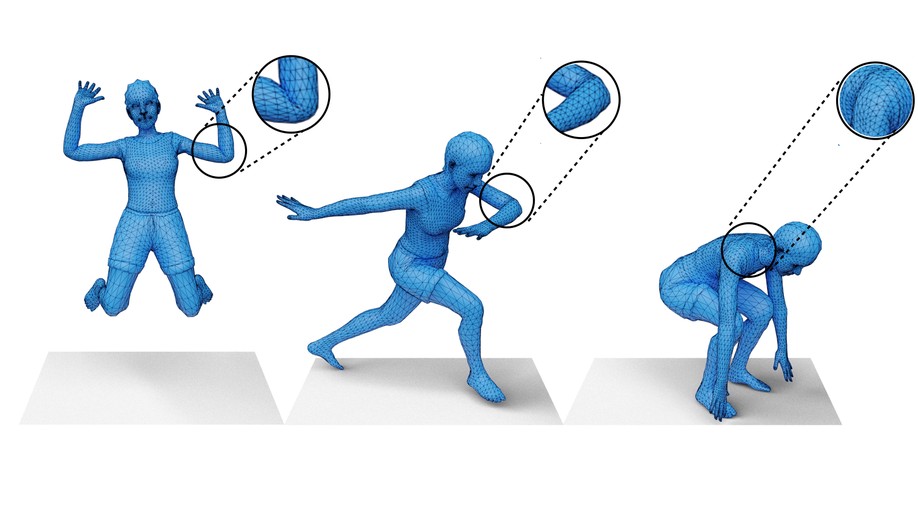

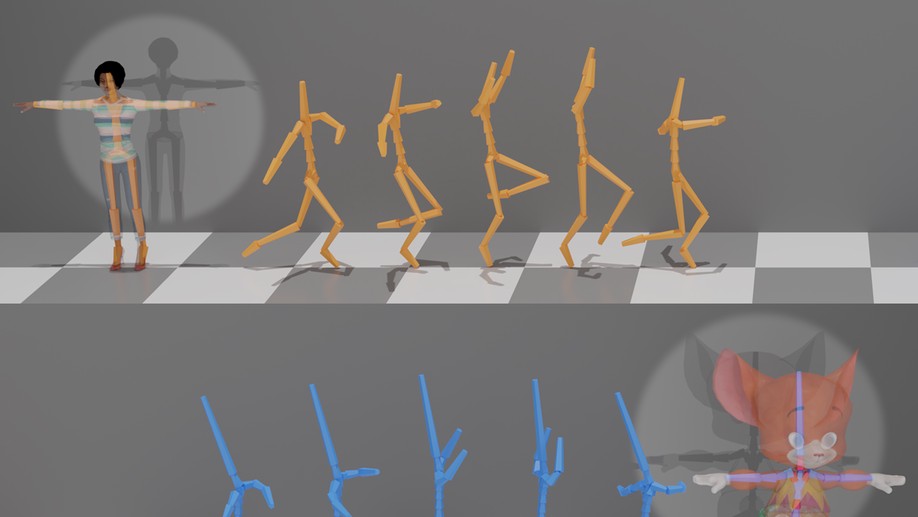

My name is Peizhuo Li (李沛卓). I am a direct doctorate student at the Interactive Geometry Lab, supervised by Prof. Olga Sorkine-Hornung. My research lies at the intersection of deep learning and computer graphics. In particular, I am interested in practical problems in character animation. Prior to my PhD studies, I was an intern at the Visual Computing and Learning Lab at Peking University, advised by Prof. Baoquan Chen.

Interests

- Computer Graphics

- Character Animation

- Deep Learning

Education

- Direct Doctorate, 2021 ~ Present, ETH Zurich

- BSc in Computer Science, 2017 ~ 2021, Turing Class, Peking University