Pose-to-Motion: Cross-Domain Motion Retargeting with Pose Prior

Abstract

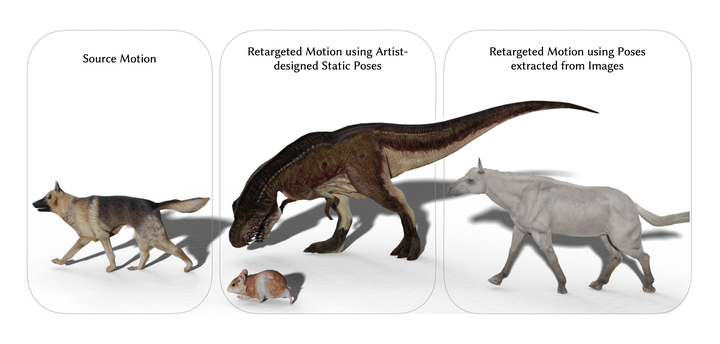

We introduce a neural motion synthesis approach that uses accessible pose data to generate plausible character motions by transferring motion from existing motion capture datasets. Our method effectively combines motion features from the source character with pose features of the target character and performs robustly even with small or noisy pose datasets. In user studies, participants preferred our retargeted motions, finding them more lifelike and more enjoyable to watch, with fewer artifacts.

Publication

Symposium on Computer Animation (SCA) 2024, Computer Graphics Forum